Before we begin, I’d like to stress that this project started before the release of GPT-4o.

Objective

- Create a cross-platform application that allows users to converse and hone their language skills

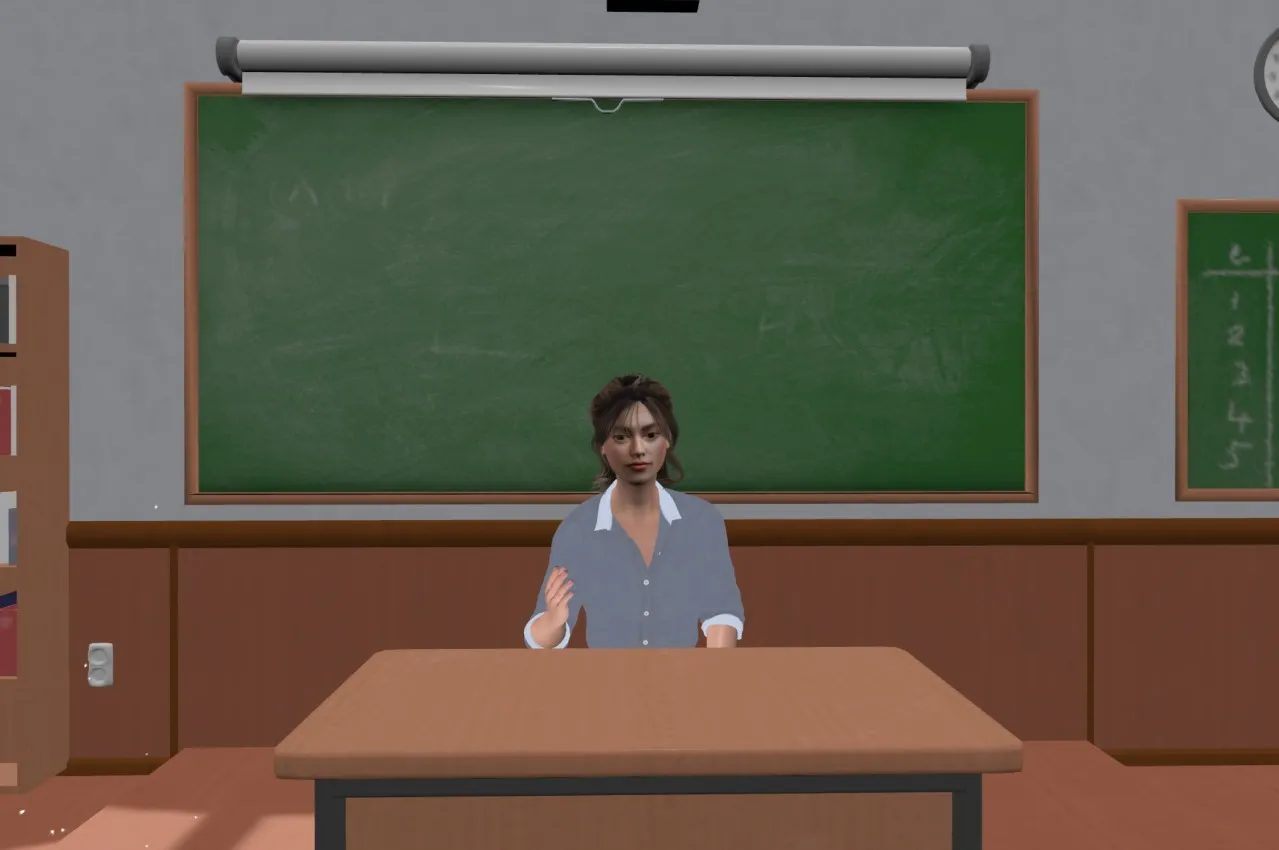

- There should be a 3D avatar representing the teacher, and the focus should be on conversing with this avatar.

- The conversations should be driven primarily by voice and audio.

- There should be a way to edit the prompts given to the AI, to help guide it better.

- The user should be able to switch to a mobile VR mode for a more immersive experience.

- Indian-ise the experience, since that is the market we wanted to target initially.

Challenges & Execution

Since this was a cross-platform application, we decided to go with Dart & Flutter rather than React Native, based on people’s experiences with the framework.

The app was going to have around five screens: login/signup, home, history/profile, chat, and VR.

Since the core of the application was the Chat, that is what we implemented first. For a conversation to work, each turn would need to flow through three components:

- Speech-to-Text: For understanding what the user was saying

- Response Generation: To generate a response for what had been said

- Text-to-Speech: To generate an audio response, to be said by the avatar
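
The three components above can be sketched as a single conversational turn. This is only a minimal illustration, not the app's actual code: the interface names (`SpeechToText`, `ResponseGenerator`, `TextToSpeech`), the `runTurn` function, and the `history` array are all assumptions made up for this example, and each interface stands in for whichever service ends up behind it.

```typescript
// Hypothetical service interfaces: names and signatures are illustrative only.
interface SpeechToText {
  transcribe(audio: Uint8Array): Promise<string>;
}

interface ResponseGenerator {
  reply(transcript: string, history: string[]): Promise<string>;
}

interface TextToSpeech {
  synthesize(text: string): Promise<Uint8Array>;
}

interface TurnResult {
  transcript: string;
  replyText: string;
  replyAudio: Uint8Array;
}

// Runs one conversational turn: user audio in, avatar audio out.
async function runTurn(
  stt: SpeechToText,
  generator: ResponseGenerator,
  tts: TextToSpeech,
  userAudio: Uint8Array,
  history: string[],
): Promise<TurnResult> {
  // 1. Speech-to-Text: work out what the user said.
  const transcript = await stt.transcribe(userAudio);
  // 2. Response generation: decide what the teacher says back.
  const replyText = await generator.reply(transcript, history);
  // 3. Text-to-Speech: turn the reply into audio for the avatar.
  const replyAudio = await tts.synthesize(replyText);
  // Record both sides so the next turn has some context.
  history.push(transcript, replyText);
  return { transcript, replyText, replyAudio };
}
```

Keeping each stage behind its own small interface means a provider can be swapped without touching the other two stages, which matters when a service has to be replaced mid-project.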

For the first iteration, we got things working using ChatGPT’s API for everything. However, we quickly ran into two issues. First, none of ChatGPT’s voices sounded remotely Indian. Second, ChatGPT’s Speech-to-Text did not pick up the Indian accent: the words it returned sounded similar to what was said, but added up to incoherent sentences.

To tackle the second issue, Speech-to-Text, we switched to Deepgram, but ran into similar accent-related issues.

What was baffling was that these issues existed despite years of work by companies like Google on Google Assistant and Apple on Siri (although maybe Siri is not the best example of this). But that did light a bulb! Could we use the modern smartphone’s built-in Speech-to-Text APIs to handle this?

No.

We tried. Accent issues, again. :(

This was surprising because the same modules picked up our accents well enough when using Google Assistant. Maybe it had to do with the fact that things spoken to the assistant were specific commands and usually only one or two phrases long. But when conversing, the sentence structure is different, and the length of spoken sentences increases considerably (don’t quote me on this). Who even knows.

What did end up working was Google’s Speech-to-Text service on the Google Cloud Platform (surprise, surprise). This picked up the accent well, with very few errors to speak of. So now, we had a working Speech-to-Text, a working response generation module, and it was time to tackle Text-to-Speech.

Text-to-Speech was a challenge right from the initial stages because of the real-time nature of the responses. The audio was streamed in over WebSockets and had to be streamed out to the audio module, which did not like this. We had to dig deep into the source code to figure out how to make a streamed audio response work. In fact, the lack of proper documentation for the audio modules was an issue we faced throughout the development process.
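
To make the streaming problem a little more concrete, here is a minimal sketch of one general way to bridge a chunked socket stream and an audio player: hold back the first few chunks until a small prebuffer has built up, then pass everything through in order. This is a generic technique and not necessarily how the app solved it. `StreamingAudioBuffer`, `AudioSink`, and the `prebufferBytes` threshold are hypothetical names invented for this example, and the sink stands in for whatever the real audio module exposes.

```typescript
// Hypothetical sink: stands in for whatever call feeds bytes to the audio player.
type AudioSink = (chunk: Uint8Array) => void;

class StreamingAudioBuffer {
  private pending: Uint8Array[] = [];
  private bufferedBytes = 0;
  private started = false;

  constructor(
    private readonly sink: AudioSink,
    private readonly prebufferBytes: number,
  ) {}

  // Call for every binary message received from the socket.
  push(chunk: Uint8Array): void {
    if (this.started) {
      // Playback is already running, so pass chunks straight through.
      this.sink(chunk);
      return;
    }
    this.pending.push(chunk);
    this.bufferedBytes += chunk.length;
    if (this.bufferedBytes >= this.prebufferBytes) {
      this.flush();
    }
  }

  // Call when the socket signals the end of one response.
  end(): void {
    // Short replies may never reach the threshold, so flush what is left.
    if (!this.started) {
      this.flush();
    }
    // Reset so the next response prebuffers again.
    this.started = false;
  }

  private flush(): void {
    this.started = true;
    for (const chunk of this.pending) {
      this.sink(chunk);
    }
    this.pending = [];
    this.bufferedBytes = 0;
  }
}
```

A larger `prebufferBytes` trades a slightly later start for fewer gaps in playback. The right value depends on the audio format and the network, so it is left as a parameter here.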

For Text-to-Speech, we again tested multiple services. Most of them were rejected because they did not stream a response back and only returned entire audio files. After much trial and error, we landed on AWS Polly for the Text-to-Speech service.

To abstract things away from the client, the final bit of architecture we implemented was creating a server we could hit for the response generation, STT, and TTS. We built it in Node.js and hosted it on Google Cloud Run using Docker.

The only thing that remained then was the 3D avatar (and the login/signup, home screen, and profile, but those are topics for another time). We implemented the 3D avatar by hosting a static site and using JS interop in Flutter to switch the site between different states. This included making a small Finite State Machine for the site, but that was easily implemented.
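
For a sense of scale, a Finite State Machine of this kind can be sketched in a few lines. The states and events below (`idle`, `listening`, `thinking`, `speaking`, and the event names) are assumptions chosen for illustration, as are `AvatarStateMachine` and its `onChange` callback. The real site may well use a different set.

```typescript
// Hypothetical avatar states and events; the real site may use different ones.
type AvatarState = "idle" | "listening" | "thinking" | "speaking";
type AvatarEvent =
  | "userStartedTalking"
  | "userStoppedTalking"
  | "replyAudioStarted"
  | "replyAudioEnded";

// For each state, the events it accepts and the state each one leads to.
const transitions: Record<AvatarState, Partial<Record<AvatarEvent, AvatarState>>> = {
  idle: { userStartedTalking: "listening" },
  listening: { userStoppedTalking: "thinking" },
  thinking: { replyAudioStarted: "speaking" },
  // Letting the user interrupt the avatar is an assumption of this sketch.
  speaking: { replyAudioEnded: "idle", userStartedTalking: "listening" },
};

class AvatarStateMachine {
  private current: AvatarState = "idle";

  // onChange is where the page would switch animations for the new state.
  constructor(private readonly onChange: (state: AvatarState) => void) {}

  get state(): AvatarState {
    return this.current;
  }

  // Returns true if the event caused a transition; invalid events are ignored.
  send(event: AvatarEvent): boolean {
    const next = transitions[this.current][event];
    if (next === undefined) {
      return false;
    }
    this.current = next;
    this.onChange(next);
    return true;
  }
}
```

With a table like this, the app only has to tell the page which event happened. Whether that event is valid in the current state is decided in one place, so a late or duplicate message cannot push the avatar into an inconsistent animation.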